There are several kinds of units in a GPU:

- Stream Processors (SPs)

- Raster Engines

- Texture Mapping Units (TMUs)

- Render Output Units (ROPs)

However, these units are not scattered loosely across a GPU. Everything is organized, and it is this organization that I will try to explain to you. This is where the notion of architecture comes in.

A Matter of Architecture

What is a micro-architecture? It is the way a GPU is organized, with each new micro-architecture aiming to improve performance over the previous one. However, micro-architecture must not be confused with generation. Two cards can belong to different generations and share the same micro-architecture (the GTX 680 and GTX 770, for example), or, conversely, belong to the same generation but use different micro-architectures (the GTX 750 and GTX 760, for example).

Architecture

To give a quick history of recent AMD cards: the HD 5000s used a VLIW5 micro-architecture, the HD 6000s moved to VLIW4 (on the high-end HD 6900 models; the rest of the range stayed on VLIW5), and the HD 7000s, like the R7/R9 200s, use GCN.

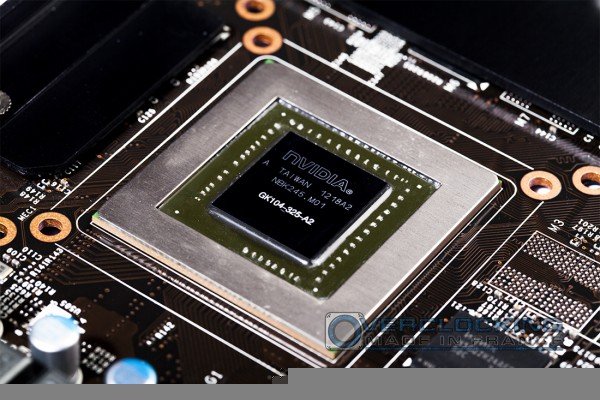

Nvidia, for its part, names its micro-architectures after famous scientists. The GTX 400 and 500 series used Fermi, the GTX 600 and 700 series (except the GTX 750 and 750 Ti) used Kepler, and the very recent GTX 900 series uses Maxwell.

Full of Blocks

As I said above, the compute units are not scattered loosely across the GPU. This is where the concept of blocks comes in.

GPUs are built from indivisible blocks (which cannot be cut in half). Depending on the architecture, these blocks have different names: Compute Units (CUs) on AMD GCN graphics cards, SMX on Nvidia Kepler cards, and SMM on Nvidia Maxwell cards.

These blocks contain Stream Processors (called CUDA Cores at Nvidia) as well as TMUs. For example:

- a CU has 64 SP and 4 TMU

- an SMX has 192 SP and 16 TMU

- an SMM has 128 SP and 8 TMU
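To make this arithmetic concrete, here is a minimal Python sketch that stores the per-block figures above and multiplies them by a number of blocks. The `BLOCKS` table and the `totals` helper are illustrative names of my own, not any vendor API; the block counts in the example (16 SMMs for the GTX 980, 44 CUs for the R9 290X) are the ones given further down in this article.

```python
# Per-block resources quoted above (SP = Stream Processors / CUDA Cores).
BLOCKS = {
    "CU": {"sp": 64, "tmu": 4},     # AMD GCN Compute Unit
    "SMX": {"sp": 192, "tmu": 16},  # Nvidia Kepler
    "SMM": {"sp": 128, "tmu": 8},   # Nvidia Maxwell
}


def totals(block: str, count: int) -> dict:
    """Return the total SPs and TMUs of a GPU built from `count` identical blocks."""
    spec = BLOCKS[block]
    return {"sp": spec["sp"] * count, "tmu": spec["tmu"] * count}


print(totals("SMM", 16))  # GTX 980: {'sp': 2048, 'tmu': 128}
print(totals("CU", 44))   # R9 290X: {'sp': 2816, 'tmu': 176}
```

Since blocks are indivisible, a card's SP and TMU counts are always whole multiples of these per-block figures.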

Without going into detail, we can note that AMD has chosen smaller and more numerous blocks, while Nvidia's are larger and fewer (although they have shrunk with Maxwell).

Take an Nvidia Kepler card: you will notice that its number of CUDA Cores is a multiple of 192. The multiplier is the number of SMXs.
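Going the other way, a minimal sketch can recover the SMX count from a Kepler card's CUDA Core count by dividing by 192. The `smx_count` function is a hypothetical helper for illustration; the core counts used (1536, 1152 and 2880) are the published specifications of the GTX 770, GTX 760 and GTX 780 Ti.

```python
def smx_count(cuda_cores: int, cores_per_smx: int = 192) -> int:
    """Infer the number of Kepler SMXs from a card's CUDA Core count."""
    smx, remainder = divmod(cuda_cores, cores_per_smx)
    if remainder:
        # A leftover would mean a partial SMX, which cannot exist
        raise ValueError(f"{cuda_cores} is not a multiple of {cores_per_smx}")
    return smx


print(smx_count(1536))  # GTX 770: 8
print(smx_count(1152))  # GTX 760: 6
print(smx_count(2880))  # GTX 780 Ti: 15
```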

These blocks are rarely highlighted by marketing, apart from a few rare occasions. Do you remember when, in the name of HSA technology, AMD said that its A10-7850K APU had 4 CPU cores and 8 GPU cores? Well, these “GPU cores” are actually Compute Units. The graphics part integrated into the 7850K has 8 Compute Units, which makes 64 × 8 = 512 SPs and 4 × 8 = 32 TMUs.

Now, when I told you these blocks only contained SPs and TMUs, that was not quite true. They also contain low-level, very fast cache memory. Though rarely mentioned, this cache memory plays a role in a card's performance.

Here is an example, to check that you have followed correctly. Below is a Maxwell SMM, in this case from the GM204, which notably powers the GTX 970 and 980. You can compare this Maxwell block to a Kepler block (SMX), just below.

You can see the CUDA Cores (labelled “Core”), as well as the TMUs (“Tex”), not to mention the cache memory.

We see here, for instance, that Nvidia has made the SMM much smaller than the SMX so as to fit more of them (going from 192 to 128 CUDA Cores and from 16 to 8 TMUs). On the other hand, the cache memory has been increased, much to our delight.

Super Blocks

But these blocks, whether they are Compute Units, SMXs or SMMs, are themselves gathered into what we will call Super Blocks. Each Super Block has one Raster Engine (a unit which, as a reminder, processes triangles). If you do not know what a Raster Engine is, then you have not read part 1: shame on you! In any case, the more Super Blocks a card has, the more Raster Engines it has, and therefore the faster it processes triangles.

At Nvidia, the SMXs or SMMs (depending on whether we are dealing with a Kepler or a Maxwell card) are grouped into Super Blocks called Graphics Processing Clusters (GPCs). However, there is no fixed rule for the number of SMs in a GPC. Here is what we have observed:

- all Kepler GPUs except the GK110 (i.e. everything except the Titan, GTX 780 and 780 Ti) have GPCs grouping 2 SMXs. Thus, the GTX 770 has 8 SMXs grouped into 4 GPCs, while the GTX 760 has 6 SMXs grouped into 3 GPCs. For its part, the GTX 650 Ti has 4 SMXs grouped into 2 GPCs.

- on the GK110, probably to avoid a profusion of GPCs, each GPC contains not 2 but 3 SMXs. Thus the GTX 780 Ti has 5 GPCs (15 SMXs), while the GTX 780 has 4 GPCs (12 SMXs).

- the Maxwell GM107 (GTX 750 and 750 Ti, with 4 and 5 SMMs respectively) has just one GPC.

- on the Maxwell GM204 and GM206, a GPC groups 4 SMMs. Thus the GTX 980 has 4 full GPCs (16 SMMs), while the GTX 960 has 2 (8 SMMs).
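The observations above can be turned into a small calculation: divide the number of active SMs by the number of SMs per GPC and round up. This is a sketch under an assumption of mine, namely that active SMs are packed into as few GPCs as possible; the `SMS_PER_GPC` table and `gpc_count` function are hypothetical names, and the value 5 for the GM107 is simply inferred from the GTX 750 Ti having 5 SMMs in a single GPC. Since each GPC carries one Raster Engine, the result is also the Raster Engine count.

```python
import math

# SMs per GPC, as observed in the list above
SMS_PER_GPC = {
    "Kepler (except GK110)": 2,
    "GK110": 3,
    "GM107": 5,  # assumption: one GPC holding up to 5 SMMs
    "GM204/GM206": 4,
}


def gpc_count(active_sms: int, family: str) -> int:
    """Number of GPCs (and thus Raster Engines), assuming the active SMs
    are packed into as few GPCs as possible."""
    return math.ceil(active_sms / SMS_PER_GPC[family])


for card, sms, family in [
    ("GTX 770", 8, "Kepler (except GK110)"),
    ("GTX 760", 6, "Kepler (except GK110)"),
    ("GTX 780 Ti", 15, "GK110"),
    ("GTX 780", 12, "GK110"),
    ("GTX 750", 4, "GM107"),
    ("GTX 980", 16, "GM204/GM206"),
    ("GTX 960", 8, "GM204/GM206"),
]:
    print(f"{card}: {gpc_count(sms, family)} GPC(s)")
```

This reproduces the figures listed above (4, 3, 5, 4, 1, 4 and 2 GPCs); as noted, real chips have no fixed rule, so treat it as a rule of thumb.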

Here is a visual aid to understand the cut the GTX 760 has undergone compared to the GTX 770 (the hole at the bottom right is the deactivated GPC, which reduces the number of SMXs by 2 and the number of Raster Engines by 1 compared to the GTX 770).
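The GTX 760 cut can be modelled as a toy two-level hierarchy: a GPU holds GPCs, and each GPC holds SMXs plus one Raster Engine. The `Gpc` and `Gpu` classes are hypothetical and only illustrate the counting; which GPC gets disabled (here the last one) is an arbitrary choice echoing the diagram, not a statement about how Nvidia selects it.

```python
from dataclasses import dataclass, field


@dataclass
class Gpc:
    """A Super Block: one Raster Engine plus a group of SMXs."""
    smx: int
    enabled: bool = True


@dataclass
class Gpu:
    gpcs: list = field(default_factory=list)

    @property
    def smx_count(self) -> int:
        # Only SMXs inside enabled GPCs count
        return sum(g.smx for g in self.gpcs if g.enabled)

    @property
    def raster_engines(self) -> int:
        # One Raster Engine per enabled GPC
        return sum(1 for g in self.gpcs if g.enabled)


# GK104 as used by the GTX 770: 4 GPCs of 2 SMXs each
gtx_770 = Gpu([Gpc(smx=2) for _ in range(4)])

# GTX 760: the same chip with one whole GPC switched off
gtx_760 = Gpu([Gpc(smx=2) for _ in range(4)])
gtx_760.gpcs[3].enabled = False

print(gtx_770.smx_count, gtx_770.raster_engines)  # 8 4
print(gtx_760.smx_count, gtx_760.raster_engines)  # 6 3
```

Disabling a whole GPC costs both SMXs and a Raster Engine at once, which is exactly the difference between the two cards.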

At AMD, the Compute Units are gathered into large blocks called Shader Engines. Unlike at Nvidia, the number of Super Blocks does not change from one variant of a GPU to another (compare the GTX 760 and 770, based on the same GPU but with a GPC cut in the case of the GTX 760). On the other hand, a Super Block (Shader Engine) can contain more or fewer Compute Units, under two constraints: the same number of CUs in each Shader Engine, and no more than 16.

For example, the Tahiti GPU (R9 280 and R9 280X) is organized into 2 Shader Engines, but the R9 280 has 28 CUs (28 × 64 = 1792 SPs), while the R9 280X has 32 (32 × 64 = 2048 SPs). It is simple: on the R9 280, each of the 2 Shader Engines has 14 active CUs, while on the R9 280X each Shader Engine has 16. The principle is exactly the same for the other GPUs in the range, except that they have more or fewer Shader Engines:

- Hawaii (R9 290 / 290X): 4 Shader Engines

- Tonga (R9 285): 4 Shader Engines

- Tahiti (R9 280 / 280X): 2 Shader Engines

- Pitcairn / Curacao (R9 270 / 270X): 2 Shader Engines

- Bonaire (R7 260 / 260X): 2 Shader Engines

- Cape Verde (R7 250X): 1 Shader Engine

- Oland (R7 240 / 250): 1 Shader Engine
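The two constraints can be checked with a short sketch that splits a CU count across a GPU's Shader Engines. The `SHADER_ENGINES` table copies the list above; `cus_per_shader_engine` is a hypothetical helper that simply encodes the two rules stated in this article (equal CUs per Shader Engine, at most 16 each).

```python
# Shader Engine counts from the list above
SHADER_ENGINES = {
    "Hawaii": 4,
    "Tonga": 4,
    "Tahiti": 2,
    "Pitcairn/Curacao": 2,
    "Bonaire": 2,
    "Cape Verde": 1,
    "Oland": 1,
}

MAX_CU_PER_SE = 16


def cus_per_shader_engine(gpu: str, total_cus: int) -> int:
    """Split a CU count evenly across a GPU's Shader Engines, enforcing
    equal CUs per engine and at most 16 CUs per engine."""
    engines = SHADER_ENGINES[gpu]
    per_se, remainder = divmod(total_cus, engines)
    if remainder:
        raise ValueError(f"{total_cus} CUs cannot be split evenly over {engines} Shader Engines")
    if per_se > MAX_CU_PER_SE:
        raise ValueError(f"{per_se} CUs per Shader Engine exceeds {MAX_CU_PER_SE}")
    return per_se


print(cus_per_shader_engine("Tahiti", 28))  # R9 280: 14
print(cus_per_shader_engine("Tahiti", 32))  # R9 280X: 16
```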

A small example of what we mean by “the same number of CUs in each Shader Engine”: Hawaii GPUs have 4 Shader Engines, and 44 CUs in the case of the R9 290X (that is, 11 CUs per Shader Engine). It is impossible to remove just one CU (and go down to 43): you have to either remove one from each Shader Engine, or none at all. Removing one CU from each Shader Engine brings the number of CUs down to 40 (and therefore 2560 SPs). And ta-da, here is an R9 290!
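The same reasoning can be written as a sketch that lists every configuration reachable by removing the same number of CUs from each Shader Engine. `valid_cu_counts` is a hypothetical helper that only encodes the symmetry rule described above; it says nothing about which configurations AMD actually chose to sell.

```python
def valid_cu_counts(shader_engines: int, cus_per_se: int, sp_per_cu: int = 64):
    """List the (CUs, SPs) configurations reachable by disabling the same
    number of CUs in every Shader Engine, from the full chip downwards."""
    return [
        (shader_engines * n, shader_engines * n * sp_per_cu)
        for n in range(cus_per_se, 0, -1)
    ]


# Hawaii: 4 Shader Engines of 11 CUs each (R9 290X)
print(valid_cu_counts(4, 11)[:2])  # [(44, 2816), (40, 2560)]
```

The step from 44 straight to 40 CUs is why there is no 43-CU Hawaii card.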

Below, you can compare the 4 Shader Engines of a Hawaii GPU (R9 290X) with those of a Tonga GPU (R9 285). You will see that the Shader Engines are much less populated on the R9 285.

You can also see that the R9 290X has 8 64-bit memory controllers (labelled MC, for Memory Controller, on the diagram) against only 4 for Tonga.
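Since each memory controller is 64 bits wide, the total memory interface width follows directly from the controller count. The `bus_width` helper below is a trivial, hypothetical illustration of that multiplication.

```python
CONTROLLER_WIDTH_BITS = 64  # each memory controller (MC) is 64 bits wide


def bus_width(controllers: int) -> int:
    """Total memory interface width in bits."""
    return controllers * CONTROLLER_WIDTH_BITS


print(bus_width(8))  # R9 290X: 512
print(bus_width(4))  # Tonga as on the R9 285: 256
```

In other words, the R9 290X has a 512-bit memory bus, twice as wide as the R9 285's 256-bit bus.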
